projects

This is a selection of my work, created to explore new domains and to serve as a compass of sorts for gauging my interests. These projects don’t always align with my main research focus at work.

I’ll aim to keep this updated with open-source projects and publications (many are currently under review). While I can’t share proprietary company work, I may highlight general features or concepts once they become public or commercial, if I believe they could be of broader interest.

Pet Robot

At Konpanion, our pet robot Maah was created to address the challenge of loneliness among the elderly. As an applied researcher, I created the MIND that was deployed on the robot.

My work drew on research from many fields, including human-robot interaction, animal behaviour, cognitive architectures, perception, and game agent design. Rather than focusing solely on technical methods, I explored broader questions: How can our robot behave like real animals emotionally, cognitively, and socially? How does it interpret sensory input to understand human states? How can it form memories, make plans, and develop a personality shaped by experience?

One interesting challenge was adapting the models over time so that each pet could develop a unique personality and its own emotional responses. Another was leveraging multimodal inputs to understand the user's emotional state, which would also help communicate a patient's state to caregivers and nurses.

Maah also looks a bit unusual. That was deliberate, to make it comforting and non-threatening to users.

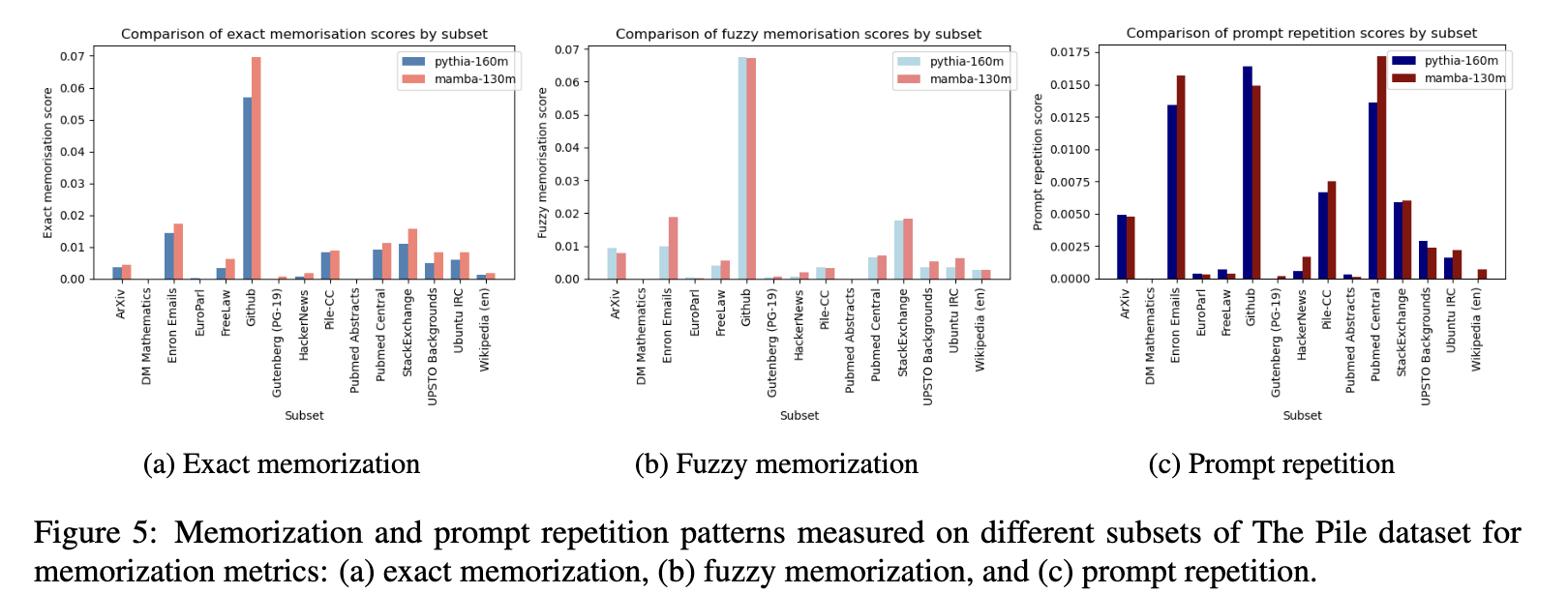

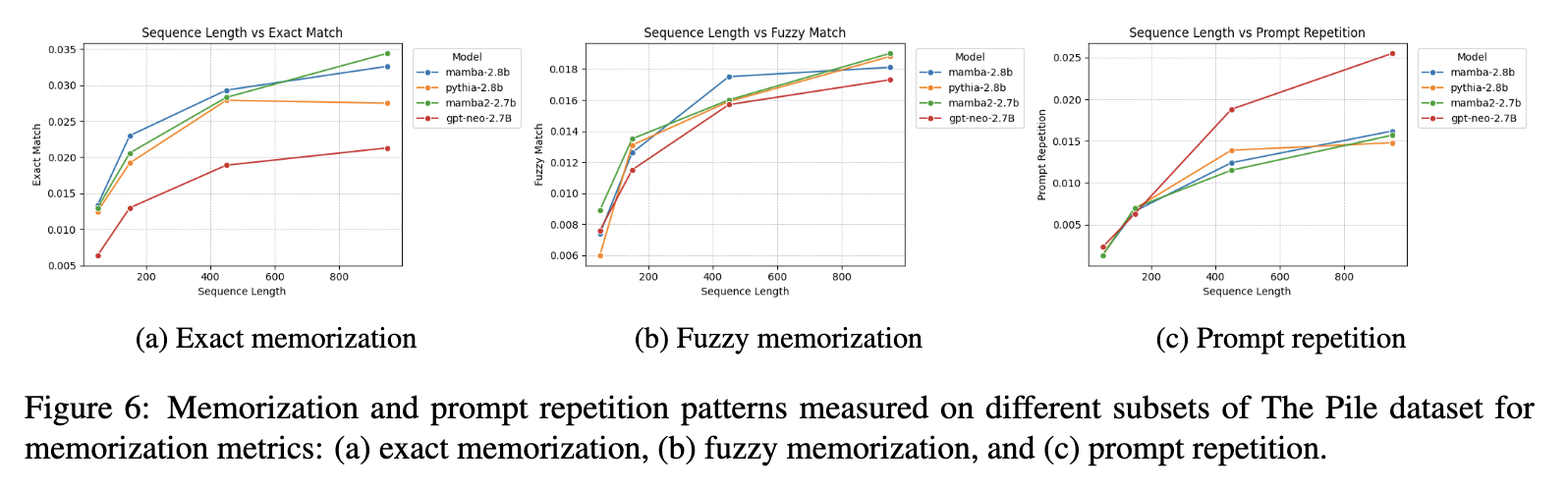

LLM Extraction Attacks

Investigated privacy vulnerabilities across LLM architectures, from state space models (SSMs) to transformers, through data extraction attacks and in-context learning (ICL) 'attacks', where the in-context examples contain private information.

The trends suggest that these models scale similarly to transformers with input size, but retain higher levels of memorization across general extraction attacks.

Currently under review; more will be added soon.

Winter Wonderland - OpenGL 3D Scene

A navigable 3D scene created using OpenGL, featuring a winter wonderland with snow, candy canes, and a snowman. The scene includes lighting effects, textures, and basic animations.

This project leveraged OpenGL for rendering, GLSL for shaders, and C++ for the application logic.

While some of the .obj and .dae files were sourced from open-source projects, others were created by me in Blender.

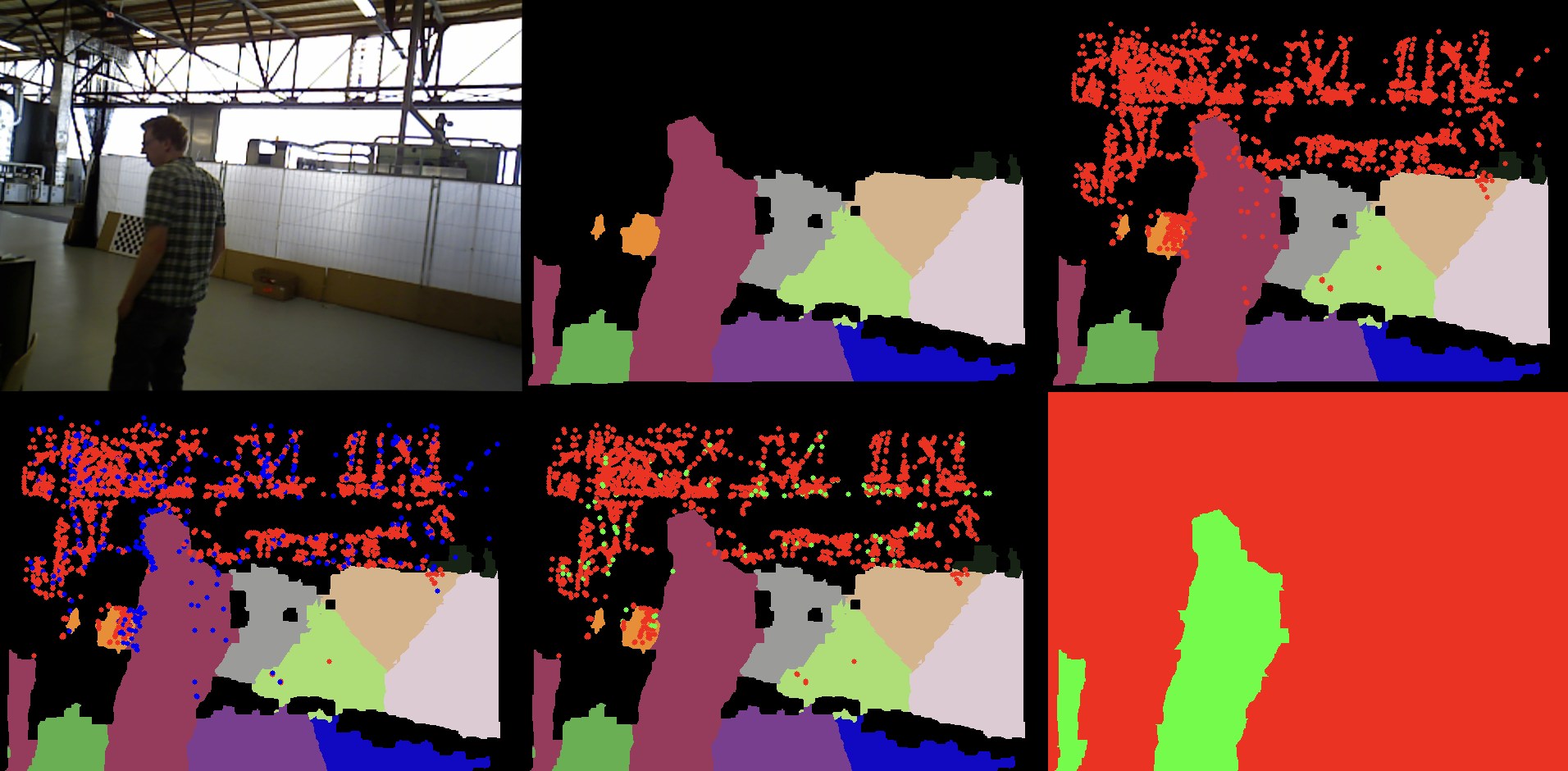

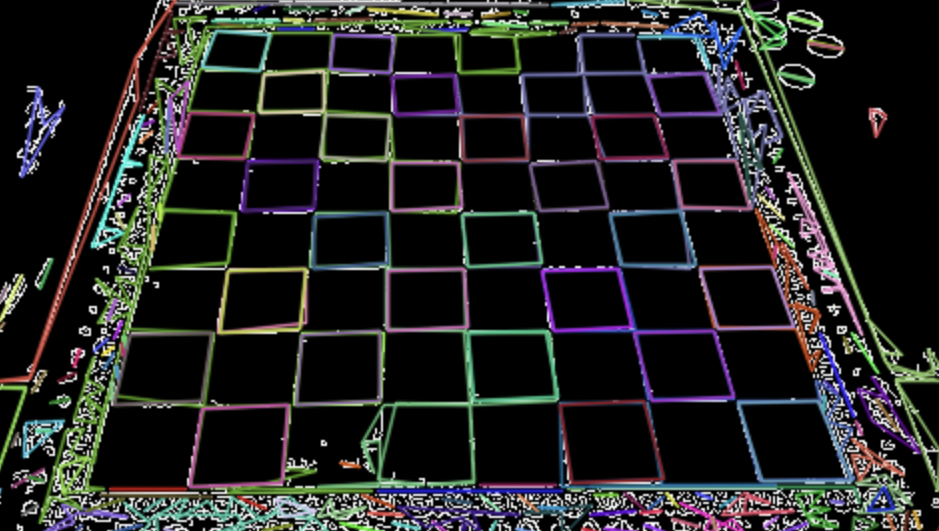

Draughts Vision Tracker

A computer vision system to analyze draughts (checkers) gameplay from video and static images. This five-part pipeline includes pixel classification, piece detection, board transformation, motion analysis, and King piece identification.

- Pixel Classification: Converted images to HSV, performed back projection with histograms, and applied connected components analysis to classify each pixel as board square or piece.

- Piece Detection: Used perspective transforms and square-center validation to classify pieces with 97% accuracy.

- Video Tracking: Applied Gaussian Mixture Models to isolate low-motion frames and detect moves frame-by-frame with 84% accuracy.

- Corner & Edge Detection: Compared Hough Lines, contour segmentation, and OpenCV's chessboard detection for board registration.

- King Detection: Investigated Hough Circles and shape heuristics for detecting Kings via board position and features.

Developed in Python using OpenCV, NumPy, and Matplotlib. Used real-game footage and annotated datasets to evaluate the system's performance.
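As a rough illustration of the square-center validation and move-detection ideas above, here is a minimal sketch in plain Python, so it runs without dependencies. It assumes a per-pixel label map has already been produced and rectified to a top-down view of the board (for example with OpenCV's `cv2.warpPerspective`). The label constants, function names, and window size are hypothetical and are not taken from the project's code.

```python
from collections import Counter

# Hypothetical per-pixel labels produced by an earlier classification step.
EMPTY, WHITE, BLACK = 0, 1, 2


def classify_squares(label_map, board_size=8, window=1):
    """Label each square by majority vote over pixels around its center.

    label_map is a square 2D grid (list of rows) of per-pixel labels taken
    from an already rectified, top-down view of the board.
    """
    side = len(label_map)
    cell = side / board_size  # pixel width of one square
    board = []
    for row in range(board_size):
        board_row = []
        for col in range(board_size):
            # Pixel coordinates of the center of this square.
            cy = int((row + 0.5) * cell)
            cx = int((col + 0.5) * cell)
            # Count labels in a small window clipped to the image bounds.
            votes = Counter(
                label_map[y][x]
                for y in range(max(0, cy - window), min(side, cy + window + 1))
                for x in range(max(0, cx - window), min(side, cx + window + 1))
            )
            board_row.append(votes.most_common(1)[0][0])
        board.append(board_row)
    return board


def changed_squares(before, after):
    """Return (row, col) positions whose label differs between two board states."""
    return [
        (r, c)
        for r, row in enumerate(before)
        for c, label in enumerate(row)
        if after[r][c] != label
    ]
```

Sampling only around each square's center makes the vote less sensitive to pieces that sit slightly off-center or overlap a square's border. Comparing the board states from two consecutive low-motion frames with `changed_squares` then gives the squares involved in a candidate move.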

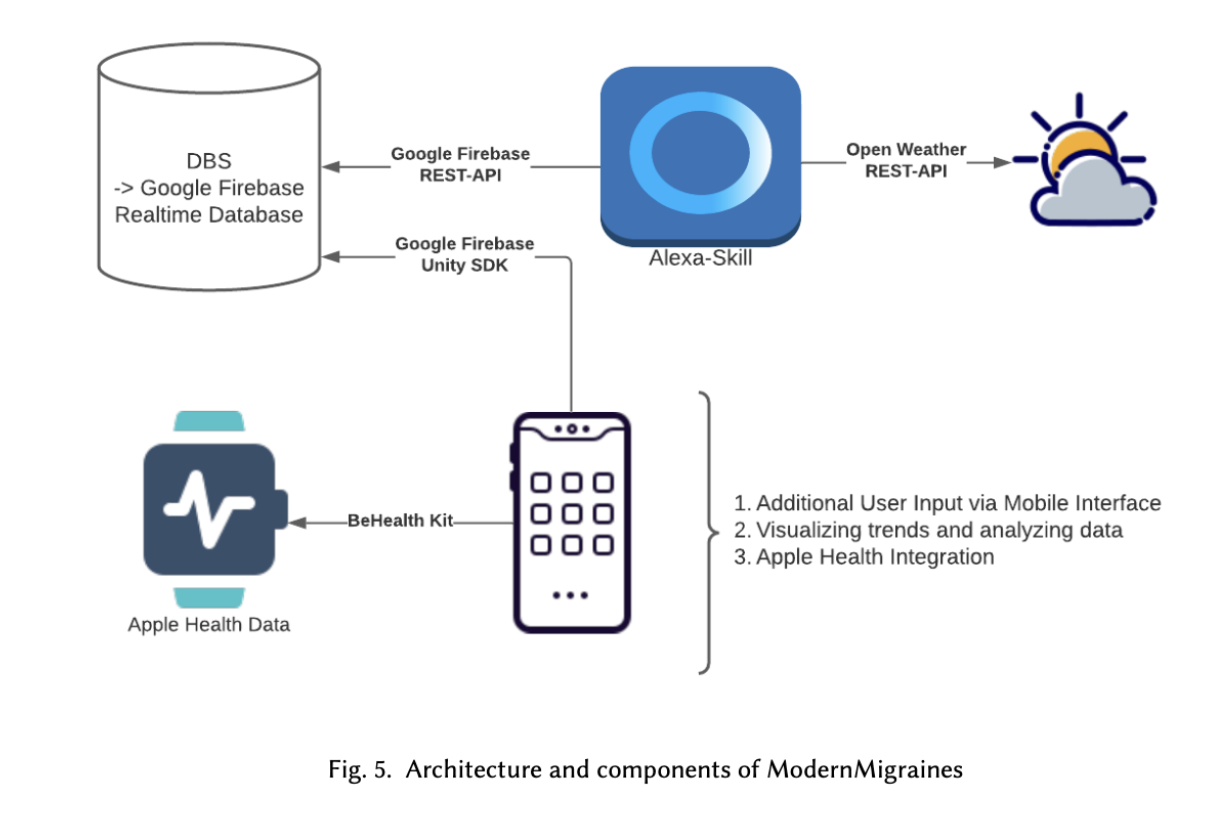

Migraine Tracker/Predictor - ModernMigraines

Took a project-based research course that focuses on using cheap sensors and networked multimodal devices to create novel and thoughtful technologies.

Ideated, designed, and productionized a personalized migraine tracking and prediction system integrating embedded sensors and voice through adaptive learning. The system blends a variety of migraine triggers (e.g. sleep, weather, pressure) to predict and track migraines. Users can use the app, Alexa, and a watch/health integration to log their migraines and triggers, and the system learns from this data to predict future migraines.
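The description above does not say which model the adaptive learning used. Purely as an illustration of learning from each logged entry, the sketch below uses online logistic regression, which is an assumption rather than the project's actual method. The class name, feature layout, and learning rate are all hypothetical.

```python
import math


class OnlineMigraineModel:
    """Hypothetical online logistic regression over numeric trigger features."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        """Estimated probability of a migraine for one day's trigger features."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, had_migraine):
        """Take one gradient step on the log loss for a single logged day."""
        error = self.predict_proba(features) - (1.0 if had_migraine else 0.0)
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error
```

Each log from the app, Alexa, or the watch would map to one `update` call, so the model adapts to an individual user without retraining from scratch. Features are assumed to be scaled to a small numeric range (for example, 0 to 1) before use.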