projects

This portfolio highlights a selection of projects from my work in industry and research. My interests center on multimodal learning, perception, and human-centered AI. Several projects from my professional roles cannot be shared due to IP restrictions, but representative public work is included below.

I will do my best to update this page with what I can!

Foundation Vision-Language Model for Materials Science & Material Discovery

Feb 2024: Some of the post-training and ablation-driven insights from this project have been published as a preprint, together with a benchmark for materials VLMs. (arXiv)

I worked on a domain-specialized vision–language foundation model for materials science, designed to support automated captioning, general materials science understanding, hypothesis generation with literature-grounded reasoning, and the generation of novel material compositions (including conditional generation for properties like ductility). The project combines large-scale multimodal pretraining with continual domain adaptation to bridge the gap between general-purpose VLMs and highly technical scientific data.

A major component of my work involves continual pretraining on a curated corpus of materials-science literature, microscopy images, crystal structures, synthesis descriptions, and property tables. To support efficient scaling, I built distributed training pipelines with DeepSpeed, activation checkpointing, and sharded GPU memory, enabling training across multiple GPUs while maintaining stability over long-horizon runs.
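To give a sense of what such a setup involves, here is a minimal sketch of a DeepSpeed-style configuration expressed as a Python dictionary. The keys are standard DeepSpeed config options, but every value (batch sizes, ZeRO stage, precision) is a placeholder assumption and not the configuration used in this project. The helper `effective_batch_size` is a hypothetical convenience function.

```python
import json

# Illustrative DeepSpeed-style config. All values are placeholder
# assumptions, not the settings used in the project.
ds_config = {
    "train_micro_batch_size_per_gpu": 4,
    "gradient_accumulation_steps": 8,
    "bf16": {"enabled": True},
    # ZeRO stage 2 shards optimizer states and gradients across GPUs.
    "zero_optimization": {"stage": 2, "overlap_comm": True},
    # Activation checkpointing trades recomputation for lower memory use.
    "activation_checkpointing": {"partition_activations": True},
    "gradient_clipping": 1.0,
}


def effective_batch_size(config: dict, num_gpus: int) -> int:
    """Global batch size implied by the config for a given GPU count."""
    return (
        config["train_micro_batch_size_per_gpu"]
        * config["gradient_accumulation_steps"]
        * num_gpus
    )


# Serialize to the JSON form that DeepSpeed reads from disk.
config_json = json.dumps(ds_config, indent=2)
```

With these placeholder values, `effective_batch_size(ds_config, num_gpus=8)` gives a global batch of 256 samples per optimizer step.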

I implemented multimodal alignment modules that combine vision encoders (SEM images, XRD patterns, microscopy) with text encoders trained on domain-specific terminology. The system incorporates contrastive pretraining, masked modality modeling, and mixture-of-experts routing to integrate disparate scientific formats.
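The contrastive part of this objective can be illustrated with a small, dependency-free sketch of a symmetric InfoNCE loss over paired image and text embeddings. This is a generic textbook formulation, not the project's implementation; the function names and the temperature of 0.07 are assumptions chosen for illustration.

```python
import math


def _normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def _cross_entropy_diag(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss over a batch of paired embeddings."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    # logits[i][j] = cosine similarity of image i and text j, scaled.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (_cross_entropy_diag(logits) + _cross_entropy_diag(logits_t))
```

The loss is low when each image embedding is closest to its own caption and high when the pairing is scrambled, which is the signal that pulls matched SEM or XRD images and their descriptions together in the shared space.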

On the evaluation side, I also designed benchmarking tasks to measure the model’s ability to:

- Generate composition- or structure-aware captions for Scanning Electron Microscopy (SEM) & X-Ray Diffraction (XRD) images

- Generate hypotheses using property-aware reasoning over literature-derived descriptors

- Answer PhD-level materials science reasoning questions

- Propose novel hypothetical materials satisfying target conditions (e.g., band gap, yield strength)

The model can then be used to generate material candidates that we can experimentally test in our autonomous lab. This creates a unique closed-loop system in which synthesized results can be reincorporated into training via continual finetuning, which tightens the model’s alignment with real physical behavior instead of purely simulated data.

Looking ahead, as the model is increasingly integrated into the lab workflow, I am excited to leverage the closed-loop data it produces to apply reinforcement learning from human and experimental feedback, which will allow continual improvement of generative material proposals.

Pet Robot: Cognitive Architecture for Affective Human–Robot Interaction

At Konpanion, our pet robot Maah was created to address loneliness among older adults in care homes. I served as the applied researcher responsible for developing the robot’s cognitive architecture, perception pipeline, and adaptive behaviour models.

My work drew on research in human–robot interaction, animal behaviour, cognitive architectures, multimodal perception, and agent design. The central question was how to design a robot that behaves like a real animal: emotionally responsive, socially aware, and capable of forming long-term attachments.

I designed a stateful cognitive architecture that integrates vision, audio, tactile and motion sensing with reinforcement-learning–based planning. This enabled the robot to maintain internal variables such as affect, arousal, memory, preferences, social bond strength and environmental context. These internal representations allowed the robot to make decisions that evolve over time instead of relying on scripted behaviours.
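As a toy illustration of what "stateful" means here, the sketch below keeps a few internal variables that persist between interactions and drive behaviour selection. It is purely hypothetical: the variable ranges, the decay factor, the behaviour names, and the hand-written rules (which stand in for the reinforcement-learning planner) are all assumptions and do not reflect Maah's actual architecture.

```python
from dataclasses import dataclass


def _clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


@dataclass
class InternalState:
    valence: float = 0.0  # affect, in [-1, 1]
    arousal: float = 0.0  # in [0, 1]
    bond: float = 0.0     # social bond strength, in [0, 1]

    def update(self, touch: float, user_valence: float, decay: float = 0.9) -> None:
        """Blend the latest observations into the slowly changing state."""
        self.valence = _clamp(
            decay * self.valence + (1 - decay) * user_valence, -1.0, 1.0
        )
        self.arousal = _clamp(decay * self.arousal + (1 - decay) * touch, 0.0, 1.0)
        # The bond only grows through positive interaction, and grows slowly.
        if touch > 0 and user_valence > 0:
            self.bond = _clamp(self.bond + 0.01 * touch, 0.0, 1.0)


def select_behaviour(state: InternalState) -> str:
    """Rule-based stand-in for a learned policy over the internal state."""
    if state.arousal > 0.5:
        return "play" if state.valence >= 0 else "retreat"
    return "nuzzle" if state.bond > 0.3 else "rest"
```

Because the state carries over between calls, the same stimulus can produce different behaviour depending on interaction history, which is the property that distinguishes this kind of design from a scripted one.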

Understanding the user was a key challenge. I developed a multimodal emotion recognition system using speech and facial cues, achieving 89% accuracy in real-world deployment. These estimates informed behaviour selection and provided caregivers with interpretable summaries of user emotional state.

I also explored how the robot’s behaviour could change with experience. The system incorporated long-term memory, reinforcement-based behavioural shaping and experience-driven emotional responses so that each robot developed a unique interaction style over time, similar to how real companion animals form bonds with their owners.

The visual design of Maah was intentionally non-anthropomorphic to make the robot feel comforting, safe and approachable for vulnerable users in care environments.

LLM Extraction Attacks on State Space Models & Transformers

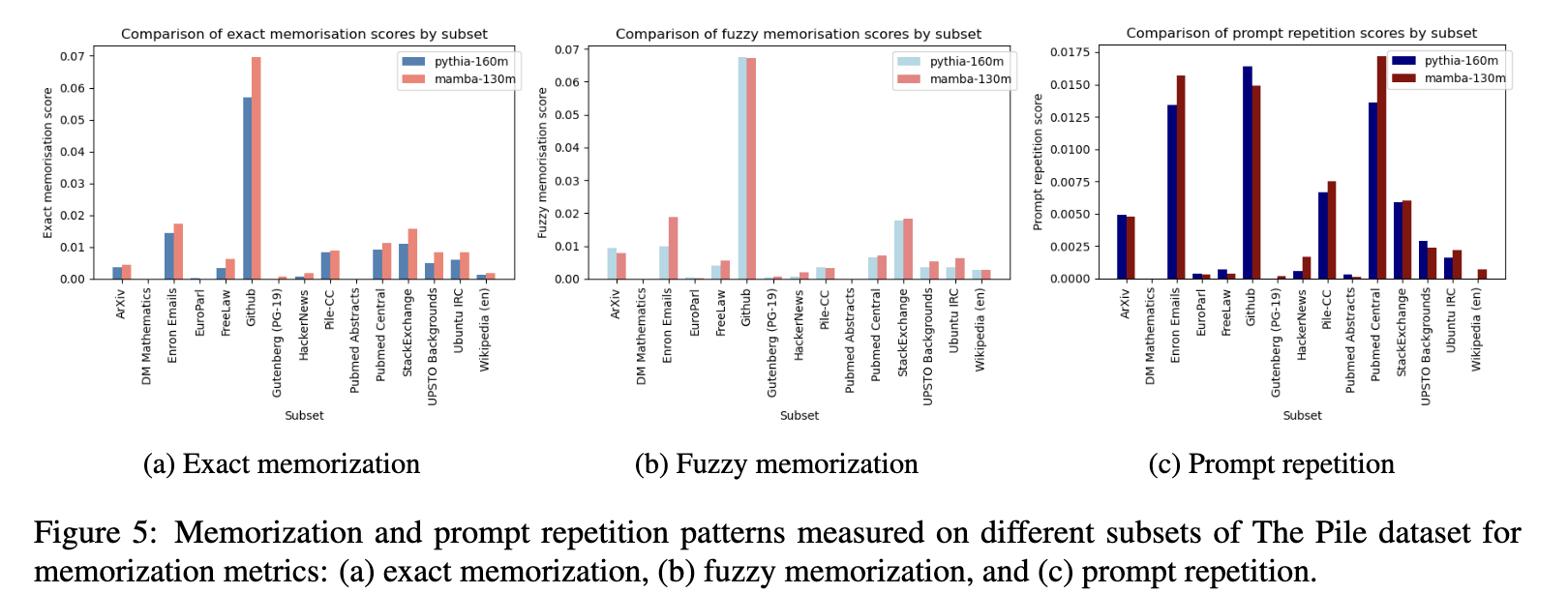

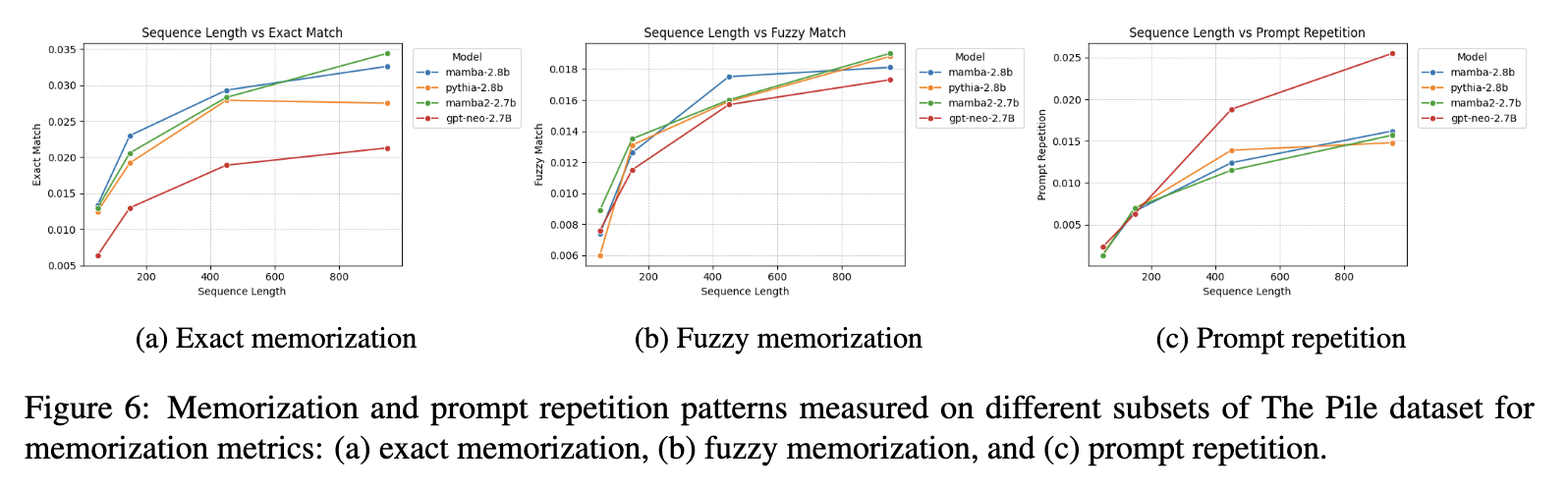

This project examines how different LLM architectures memorize and leak training data by evaluating both state space models (SSMs) and transformers under extraction attacks. The goal was to understand how their architectural inductive biases influence what information is stored and how easily it can be recovered.

I implemented two complementary evaluation settings: a standard data extraction attack and a leakage attack based on in-context learning, where private information is placed in demonstrations and later recovered by strategic prompting. These setups allowed me to isolate how each architecture handles rare sequences, unique identifiers, and structured sensitive patterns.
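A standard way to score the first setting is to prompt the model with a prefix from a training sample and check whether it reproduces the true suffix verbatim. The sketch below shows that metric under stated assumptions: `generate` is a hypothetical wrapper around whichever model is being tested, and `toy_generate` is a stand-in so the example runs without a real model. It is not the evaluation code used in this project.

```python
from typing import Callable, Iterable, Tuple


def extraction_rate(
    generate: Callable[[str, int], str],
    samples: Iterable[Tuple[str, str]],
) -> float:
    """Fraction of (prefix, suffix) pairs whose suffix is reproduced verbatim.

    `generate(prefix, max_chars)` is a hypothetical wrapper that returns the
    model's continuation of `prefix`, at most `max_chars` characters long.
    """
    samples = list(samples)
    if not samples:
        return 0.0
    hits = 0
    for prefix, suffix in samples:
        continuation = generate(prefix, len(suffix))
        if continuation[: len(suffix)] == suffix:
            hits += 1
    return hits / len(samples)


# Toy stand-in for a model that has memorized exactly one training string.
_MEMORIZED = {"Contact: jane.doe@": "example.com"}


def toy_generate(prefix: str, max_chars: int) -> str:
    return _MEMORIZED.get(prefix, "")[:max_chars]
```

Running the same function over identical sample sets for an SSM and a transformer gives directly comparable memorization rates; the in-context leakage setting can reuse it by placing the private string in the demonstrations rather than the training data.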

Across scaling experiments, I found that SSM-based LLMs match transformers on downstream performance but consistently exhibit higher rates of memorization under both extraction and ICL-based leakage attacks. The results suggest that recurrence and architectural memory in SSMs cause different retention behaviours compared to the attention-based mechanisms in transformers.

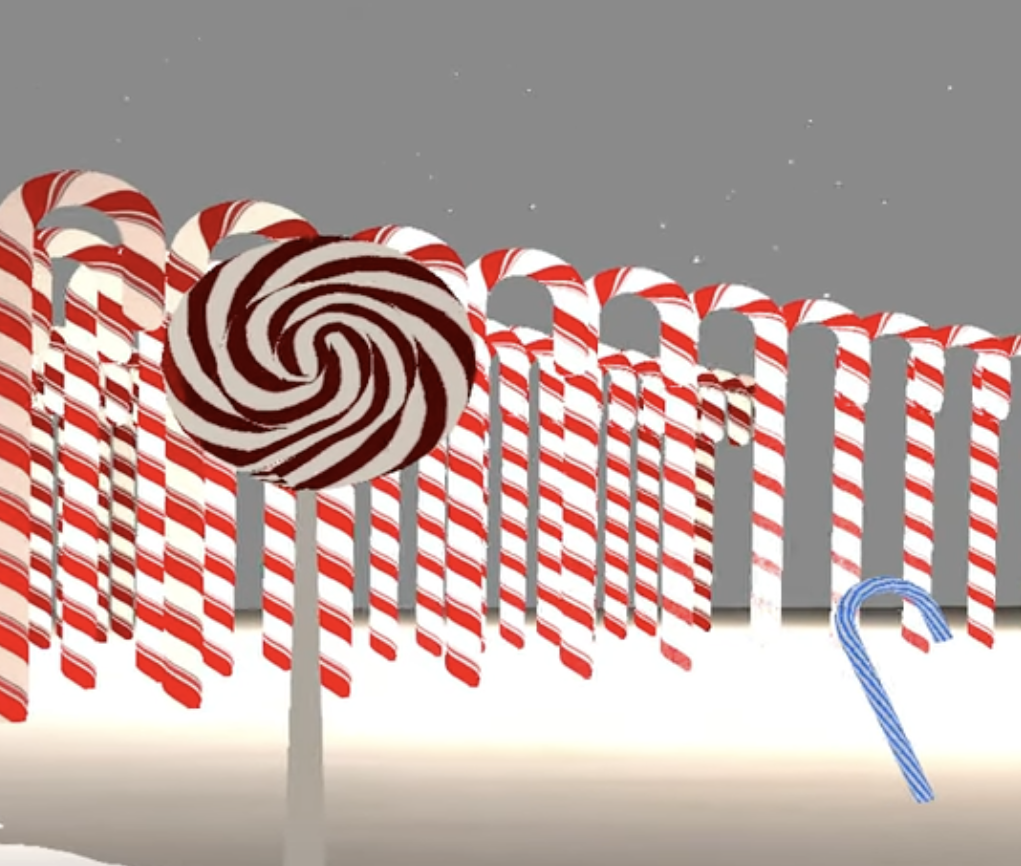

Winter Wonderland — OpenGL 3D Graphics

Although this began as a graphics project, I used it as a small research environment to explore how rendering pipelines, lighting models, and camera transformations influence visual perception in 3D scenes. Understanding these components is increasingly relevant for embodied AI, differentiable rendering, and vision models that operate on synthetic data.

I implemented a modern OpenGL pipeline with GLSL shaders, custom Blender-generated assets, dynamic lighting, and procedural effects such as snowfall. This provided hands-on insight into how 3D geometry, texture mappings, and shading interact in ways that affect downstream feature extraction in vision models.

The project served as a testbed for experimenting with rendering variations that resemble domain shift in robotics and vision tasks. It helped me better understand how controllable synthetic environments can be used to benchmark perception systems and analyze failure modes under changes in lighting, viewpoint, and scene complexity.

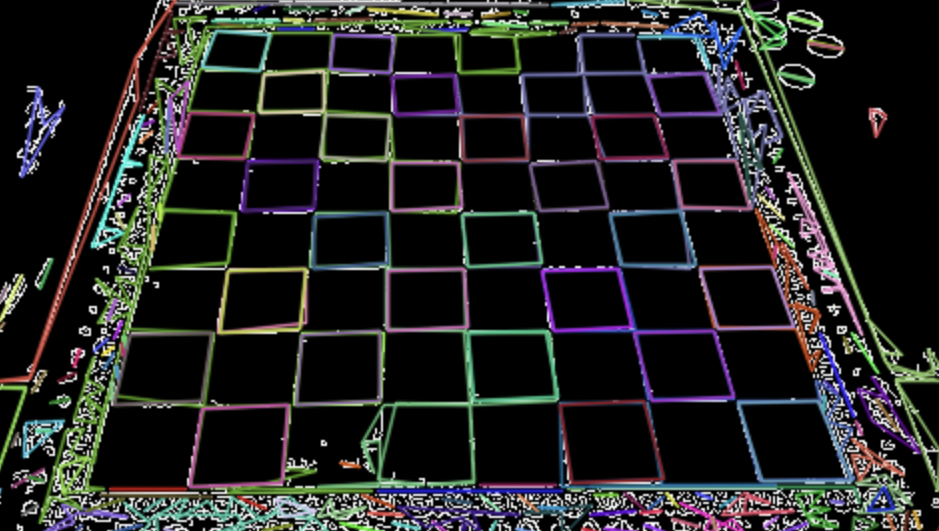

Draughts Vision Tracker

This project began as a classical computer vision pipeline for tracking draughts gameplay, but I approached it as an investigation into the limits of non-learning-based perception systems under challenging real-world conditions.

I built a five-stage pipeline involving pixel classification, board registration, piece detection, temporal motion analysis, and king identification. The system was evaluated on real match footage with varied lighting, occlusion, and camera distortion.
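To illustrate the temporal analysis and king identification stages, the sketch below infers a move by diffing two board states, as they might come out of the piece detection stage. The board encoding (`"."` for empty, `"w"`/`"b"` for men, `"W"`/`"B"` for kings) and the function `infer_move` are assumptions made for this example, and the logic ignores harder cases such as frames captured mid-move.

```python
from typing import List, Optional

Board = List[str]  # one string per row: "." empty, "w"/"b" men, "W"/"B" kings


def infer_move(before: Board, after: Board) -> Optional[dict]:
    """Infer a single move by diffing two per-frame board states."""
    vacated, filled = [], []
    for r, (row_b, row_a) in enumerate(zip(before, after)):
        for c, (b, a) in enumerate(zip(row_b, row_a)):
            if b != "." and a == ".":
                vacated.append((r, c))
            elif b == "." and a != ".":
                filled.append((r, c))
    if len(filled) != 1:
        return None  # no move, or ambiguous (occlusion, detection noise)
    dst = filled[0]
    mover = after[dst[0]][dst[1]].lower()
    # The origin is the vacated square that held a piece of the moving side.
    origins = [s for s in vacated if before[s[0]][s[1]].lower() == mover]
    if len(origins) != 1:
        return None
    src = origins[0]
    captured = [s for s in vacated if s != src]
    # A man that reappears as a king was crowned during this move.
    crowned = before[src[0]][src[1]].islower() and after[dst[0]][dst[1]].isupper()
    return {"from": src, "to": dst, "captured": captured, "crowned": crowned}
```

Returning `None` for ambiguous diffs makes detection failures explicit, so a caller can wait for a cleaner frame instead of committing to a wrong move.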

Studying its failure modes provided insight into where classical vision methods still perform well (geometric consistency, controlled lighting) and where they break down, informing how ML or multimodal systems could replace or augment specific components. This project strengthened my understanding of structured perception problems, temporal tracking, and the trade-offs between hand-engineered pipelines and learned representations.

indoSLAM — RGB-D Dynamic Region Tracking

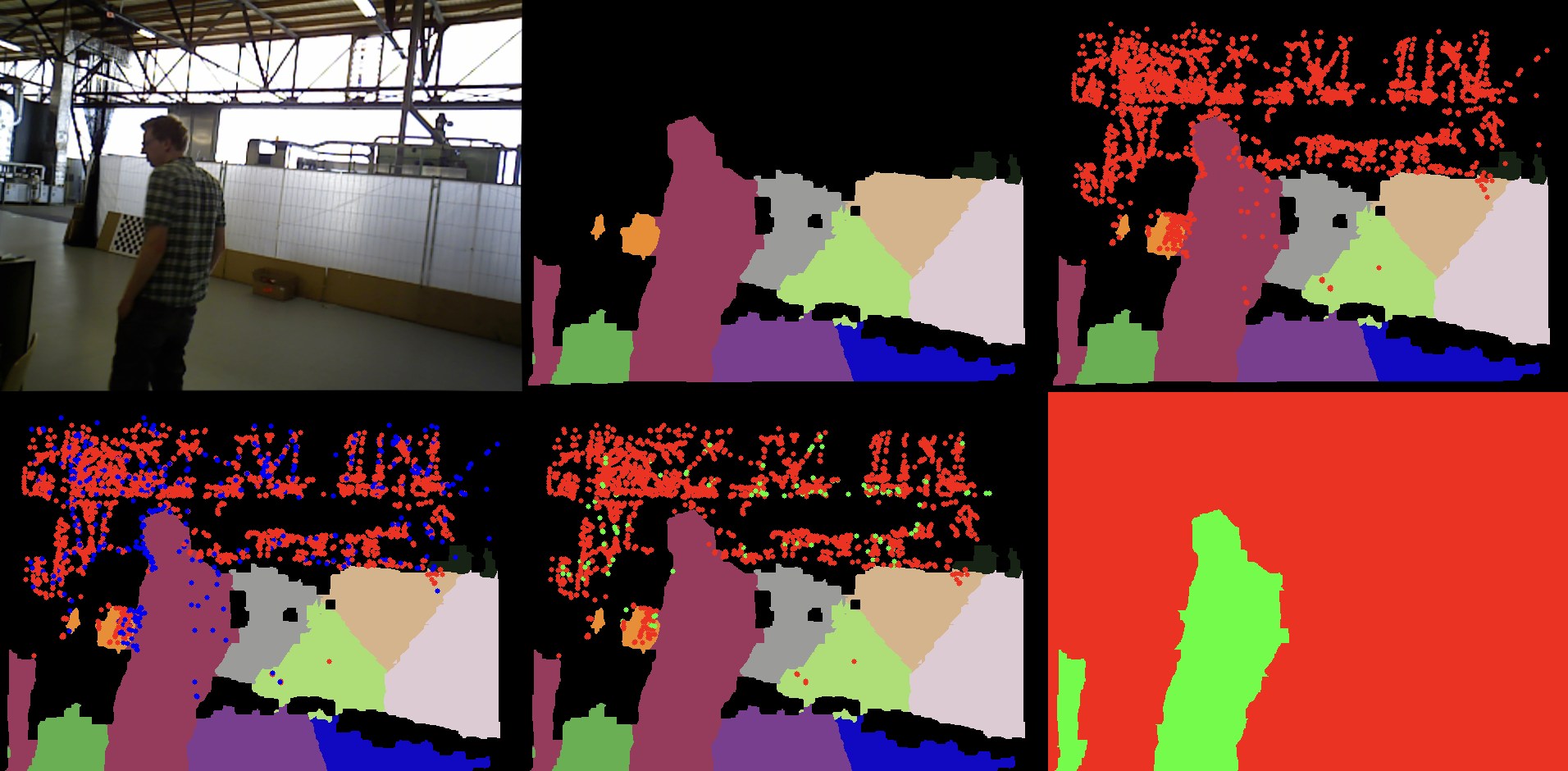

indoSLAM is an exploration of how simple RGB-D pipelines can detect dynamic regions in indoor scenes without relying on full probabilistic SLAM or deep models. The research motivation was to understand how much can be achieved with classical feature-based methods before more complex architectures are necessary.

I created a pipeline combining depth-based k-means clustering, ORB feature extraction, multi-stage feature matching, and geometric filtering to identify motion across frames. The system outputs a set of inliers, outliers, dynamic regions, and a structured visualization for analysis.
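The depth clustering step can be illustrated with a minimal, pure-Python k-means over scalar depth values. This is a stand-in for illustration only: a real pipeline would typically run a vectorized implementation over a full depth image, and the evenly spaced initialization and iteration count here are assumptions.

```python
from typing import List, Tuple


def _assign(depths: List[float], centroids: List[float]) -> List[int]:
    """Label each depth with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda j: abs(d - centroids[j]))
        for d in depths
    ]


def kmeans_1d(
    depths: List[float], k: int, iters: int = 20
) -> Tuple[List[float], List[int]]:
    """Lloyd's algorithm on scalar depth values (e.g. metres)."""
    lo, hi = min(depths), max(depths)
    # Deterministic init: spread centroids evenly over the depth range.
    centroids = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iters):
        labels = _assign(depths, centroids)
        for j in range(k):
            members = [d for d, label in zip(depths, labels) if label == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = sum(members) / len(members)
    # Final assignment so labels match the returned centroids.
    return centroids, _assign(depths, centroids)
```

Grouping pixels into depth layers first means feature matching and geometric filtering can be assessed per layer, so a moving foreground object is less likely to be masked by a static background.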

Through experimentation, I characterized how different motion types, lighting conditions, and depth noise affect dynamic region detection. This provided insight into the limitations of classical SLAM in dynamic settings, and helped clarify when learned scene representations or motion models would meaningfully outperform traditional pipelines.

ESCAILATOR — Emotional Sensing Chatbot & Lingual AI Therapy

As part of the EU-funded ESCAILATOR initiative, I worked on developing an emotional sensing chatbot and language-based therapeutic assistant for breast cancer patients. My research motivation was to investigate how linguistic and acoustic features can serve as signals for emotional state, psychological distress, and patient well-being.

I integrated speech emotion recognition, linguistic biomarkers, and adaptive conversational strategies into a unified system aimed at supporting remote psychological assessment. The system was designed to encourage honest self-reporting through natural conversation rather than structured questionnaires.

I explored how affective signals and language patterns correlate with clinical indicators, and how these signals can be incorporated into models that remain interpretable for clinicians. This contributed to broader research questions in affective computing, mental health monitoring, and designing empathetic human–AI interactions.

Pixel Mamba — State Space Architecture for Vision-Language Pre-training

Pixel Mamba explores how state space models (SSMs) can replace transformer blocks in vision–language pretraining. The research question was whether architectural differences in sequence modeling would affect efficiency, stability, and downstream performance on multimodal tasks.

I rearchitected the PIXEL model by substituting transformer layers with Mamba-based SSM layers, then implemented and trained the modified model on masked language modeling and GLUE tasks. The SSM variant achieved 2.8× faster processing and an 86.8% reduction in GPU memory usage while maintaining competitive accuracy.

This project deepened my understanding of long-range sequence modeling, architectural inductive biases, and the trade-offs between efficiency and representational capacity. It also informed my later work on memorization and extraction attacks, where SSMs show distinct retention characteristics compared with transformers.

Multimodal Migraine Tracking & Prediction

This project investigated how multimodal signals can be combined to predict migraine onset in a personalized way. The research motivation was to understand how physiological data and naturalistic speech can be fused to model patterns that vary significantly across individuals.

I built a pipeline that integrates embedded sensor data (e.g., activity, heart rate), environmental context, and spoken diary entries into a unified representation. I explored both user-independent and personalized modeling strategies to handle high inter-individual variability.
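One common way to handle inter-individual variability, shown here only as an assumed example and not necessarily the approach taken in this project, is to normalize each user's signals against their own baseline before modeling. The function name and the sample heart-rate values below are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List


def normalize_per_user(readings: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Z-score each user's readings against that user's own baseline."""
    normalized = {}
    for user, values in readings.items():
        mu = mean(values)
        sigma = pstdev(values) or 1.0  # avoid dividing by zero for constant signals
        normalized[user] = [(v - mu) / sigma for v in values]
    return normalized


# Hypothetical heart-rate samples for two users with different baselines.
heart_rate = {"user_a": [60.0, 70.0, 80.0], "user_b": [90.0, 100.0, 110.0]}
scaled = normalize_per_user(heart_rate)
```

After normalization both users map to the same relative pattern despite a 30 bpm difference in baseline, which lets a shared model focus on deviations from each person's norm.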

Through evaluation, I analyzed how much data was needed per user before personalization improved performance, and which modalities contributed most to predictive accuracy. This work strengthened my interest in multimodal sequence modeling, personalization under data scarcity, and health-oriented AI systems.